I also changed the installation path to “Program Files” instead of the default “Program Files (x86)”. If the installer asks to set up PATH variables, do so for all users. If the installer reports a failure when trying to do so, don’t worry, we can do it manually as well.

For me the installer failed to set up any PATH variables, stating that my PATH was too long (not true). The Open MPI website states that this is a temporary issue with their installer that should be fixed in time. In this case we shall set it up manually.
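As a rough aid for the manual PATH setup mentioned above, the following Python sketch checks whether a directory is already on PATH and builds an updated PATH string. The install directory `C:\Program Files\OpenMPI\bin` and the helper names `is_on_path` and `with_directory` are assumptions made for illustration; they do not come from the Open MPI installer. The sketch only computes the new value and does not modify the system environment.

```python
import os
import shutil

# Hypothetical install location (an assumption); adjust to your actual Open MPI folder.
OPENMPI_BIN = r"C:\Program Files\OpenMPI\bin"


def _normalize(entry):
    """Normalize a PATH entry: trim spaces and trailing slashes, ignore case (Windows-style)."""
    return entry.strip().rstrip("\\/").lower()


def is_on_path(directory, path_value, sep=";"):
    """Return True if `directory` already appears in the PATH string `path_value`."""
    entries = [_normalize(e) for e in path_value.split(sep) if e.strip()]
    return _normalize(directory) in entries


def with_directory(directory, path_value, sep=";"):
    """Return a PATH string that contains `directory`, appending it only if missing."""
    if is_on_path(directory, path_value, sep):
        return path_value
    if not path_value:
        return directory
    return path_value.rstrip(sep) + sep + directory


if __name__ == "__main__":
    current = os.environ.get("PATH", "")
    print("Open MPI bin on PATH:", is_on_path(OPENMPI_BIN, current, os.pathsep))
    # shutil.which returns None when the executable cannot be found on PATH.
    print("mpiexec found at:", shutil.which("mpiexec"))
```

If `mpiexec` is reported as not found after installation, the string returned by `with_directory` is what the PATH variable would need to contain; setting it for all users is still done through the Windows environment variable settings.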

Install MPI on Windows 64 Bit

Note that I downloaded the 64-bit version.

Install MPI on Windows install

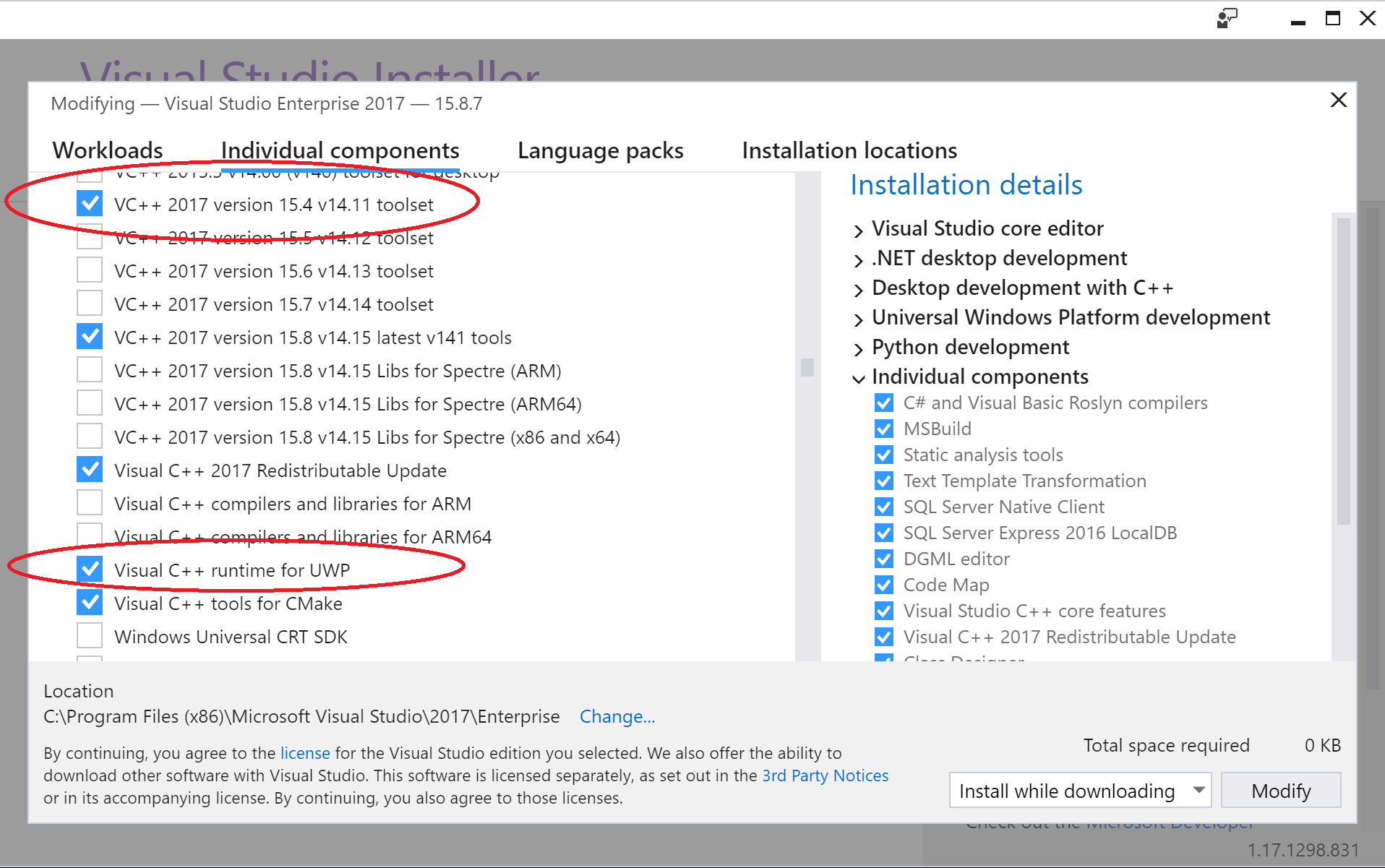

Any version of Visual Studio should do; all we really need is the Microsoft C++ compiler and related tools that Open MPI uses. This tutorial does not make direct use of Visual Studio.

Download and install the latest version of Open MPI.

Install MPI on Windows software

Though the examples here are for RHEL/CentOS, the steps are general and can be used for any compatible Linux operating system such as Ubuntu (16.04, 18.04, 19.04, 20.04) and SLES (12 SP4 and 15). More examples for setting up other MPI implementations on other distros are in the azhpc-images repo. We recommend using the latest stable versions of the packages, or referring to the azhpc-images repo.

If an HPC application recommends a particular MPI library, try that version first. If you have flexibility regarding which MPI you can choose, and you want the best performance, try HPC-X. Overall, HPC-X MPI performs the best by using the UCX framework for the InfiniBand interface, and takes advantage of all the Mellanox InfiniBand hardware and software capabilities. Additionally, HPC-X and OpenMPI are ABI compatible, so you can dynamically run an HPC application with HPC-X that was built with OpenMPI. Similarly, Intel MPI, MVAPICH, and MPICH are ABI compatible. The following figure illustrates the architecture for the popular MPI libraries.

Unified Communication X (UCX) is a framework of communication APIs for HPC. It is optimized for MPI communication over InfiniBand and works with many MPI implementations such as OpenMPI and MPICH. Recent builds of UCX have fixed an issue so that the right InfiniBand interface is chosen when multiple NIC interfaces are present. For more information, see Troubleshooting known issues with HPC and GPU VMs on running MPI over InfiniBand when Accelerated Networking is enabled on the VM.

The HPC-X software toolkit contains UCX and HCOLL and can be built against UCX.
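The selection advice and the ABI-compatibility groups described in this section can be written down as a small lookup, which may help when deciding whether a prebuilt application can run on a different MPI library. This is only a sketch of the statements in the text: the names `ABI_FAMILIES`, `abi_compatible`, and `choose_mpi` are hypothetical, and real compatibility can also depend on library versions, which this sketch ignores.

```python
# ABI-compatible families exactly as listed in the text; versions are not modeled.
ABI_FAMILIES = [
    {"HPC-X", "OpenMPI"},
    {"Intel MPI", "MVAPICH", "MPICH"},
]


def abi_compatible(built_with, run_with):
    """Return True if an app built with one MPI library can run with the other."""
    if built_with == run_with:
        return True
    return any(built_with in family and run_with in family for family in ABI_FAMILIES)


def choose_mpi(recommended=None):
    """Prefer the application's recommended MPI; otherwise fall back to HPC-X."""
    return recommended if recommended else "HPC-X"


if __name__ == "__main__":
    print(abi_compatible("OpenMPI", "HPC-X"))
    print(abi_compatible("OpenMPI", "MPICH"))
    print(choose_mpi())
```

For example, an application built with OpenMPI is treated as runnable with HPC-X, while one built with OpenMPI is not treated as runnable with MPICH, since the two sit in different families.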

Install MPI on Windows drivers

For SR-IOV enabled RDMA capable VMs, CentOS-HPC VM images version 7.6 and later are suitable. These VM images come optimized and pre-loaded with the OFED drivers for RDMA and various commonly used MPI libraries and scientific computing packages, and are the easiest way to get started.

Only Microsoft MPI (MS-MPI) 2012 R2 or later and Intel MPI 5.x versions are supported. Later versions (2017, 2018) of the Intel MPI runtime library may or may not be compatible with the Azure RDMA drivers.

Applies to: ✔️ Linux VMs ✔️ Windows VMs ✔️ Flexible scale sets ✔️ Uniform scale sets

The Message Passing Interface (MPI) is an open library and de facto standard for distributed memory parallelization. It is commonly used across many HPC workloads. HPC workloads on the RDMA capable H-series and N-series VMs can use MPI to communicate over the low-latency, high-bandwidth InfiniBand network.
